Artificial intelligence: Exploring common misconceptions and how it works

Two images made by AI (Stable Diffusion 2.1) from the text prompts “Christmas lights on house” and “Space”

January 2, 2023

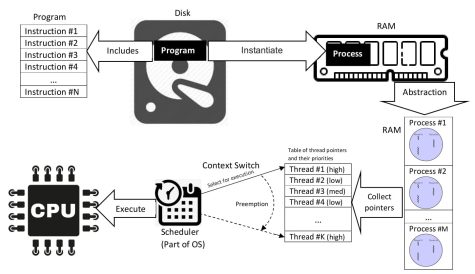

One of the most common misconceptions about artificial intelligence is that it can replace a human. As much as our technology has evolved and improved, the way we interact with it does not allow for most of the basic requirements needed to accomplish certain tasks. We interact with AI on an input and output basis, meaning that AI cannot come up with tasks to do all on its own.

Another common misconception is that all artificial intelligence has the same capabilities. In fact, there are many different types of AI, such as text generation, visual generation and interpretation, or audio generation and interpretation. Because artificial intelligence cannot come up with anything on its own, text generation naturally includes text interpretation, whereas the rest are separate in most instances. Some programs or implementations incorporate multiple types, but these are less common.

The next misconception is that artificial intelligence needs a supercomputer to run. In reality, most modern hardware is capable of running the software necessary to create or run an AI. In fact, one of your devices is likely doing so: Siri is an AI, and it runs on all recent Apple devices.

The following paragraph was written by a text generation AI trained on all Beaver Tales articles as of 12/16/22, then given this article as an input to complete.

“AI with an attention is able to cause people to trust it. Some AI think that telling America that ethics about AI with high service isn’t managed as offensive for their health.”

Getting everything that was needed to pull this off was quite tedious. To train the AI on the articles on our site, I had to download the entire site to my computer. This took over 12 hours, not because of the speed of the internet but seemingly because of some kind of DDoS protection, which is good for the site but slowed this step down greatly. After that, I wrote a program to sort through all the data and pull out just the article titles and content. I decided that it would be best not to include the authors of the articles, so the AI would not try to base its writing solely on my own, which would defeat the purpose of downloading them all. After just a few iterations, the resulting file was great for this purpose.
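A program that pulls titles and content out of downloaded pages could look roughly like the sketch below. It is not the program used for this article. It assumes each saved page keeps its title in an `<h1>` tag and its body text in `<p>` tags, which may not match the real site, and the names `ArticleExtractor` and `extract_article` are made up for illustration.

```python
from html.parser import HTMLParser


class ArticleExtractor(HTMLParser):
    """Collects the first <h1> as the title and every <p> as body text.

    The tag choices are assumptions; the real site's markup may differ.
    """

    def __init__(self):
        super().__init__()
        self.title = ""
        self.paragraphs = []
        self._current = None  # tag whose text is currently being collected
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "p") and self._current is None:
            self._current = tag
            self._buffer = []

    def handle_data(self, data):
        if self._current is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag != self._current:
            return
        # Collapse runs of whitespace left over from the page layout.
        text = " ".join("".join(self._buffer).split())
        if tag == "h1" and not self.title:
            self.title = text
        elif tag == "p" and text:
            self.paragraphs.append(text)
        self._current = None


def extract_article(html):
    """Return (title, body) for one page; text outside <h1>/<p> is skipped."""
    parser = ArticleExtractor()
    parser.feed(html)
    parser.close()
    return parser.title, "\n".join(parser.paragraphs)
```

Anything outside those two tags, such as an author byline in its own element, is simply never collected, which is one simple way to leave the authors out.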

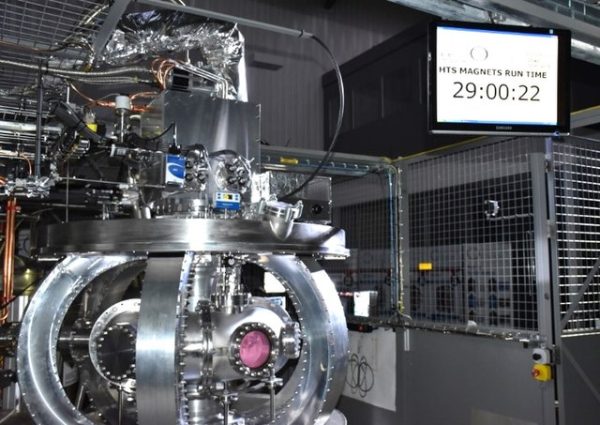

Next up, it was time to actually train the AI. This process always takes a while. Most setups use hundreds or thousands of computers to train very quickly; however, I did not have that kind of budget. The dataset I was using was quite a bit smaller, though, which made my setup mostly practical. After writing the training code and optimizing it quite a bit, I began the process. Everything was going according to plan until, after 36 hours, it tried to save the AI model, at which point it became clear I had forgotten to download a module that had not been mentioned anywhere up to that point. As Python was running as a script rather than in IDE mode, the model was just thrown away. After downloading and installing the module and making some further modifications, including routine saving to help prevent loss in case of crashes, I started the training process over.
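Routine saving of this kind can be sketched as follows. This is an illustration, not the training code from this project: the model is reduced to a placeholder dictionary, `pickle` stands in for whatever format the real model used, and `save_checkpoint`, `load_checkpoint`, and `train` are hypothetical names. Saving once before the first training step is a cheap way to find out that something needed for saving is missing before 36 hours have gone by.

```python
import os
import pickle
import tempfile


def save_checkpoint(state, path):
    """Write state to a temp file, then swap it in, so a crash mid-save
    cannot leave a half-written checkpoint behind."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "wb") as f:
        pickle.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path, "rb") as f:
        return pickle.load(f)


def train(total_steps, path, save_every=100):
    # Resume from the last checkpoint if there is one.
    state = load_checkpoint(path) or {"step": 0, "weights": 0.0}
    # Fail fast: prove that saving works before any long training run.
    save_checkpoint(state, path)
    while state["step"] < total_steps:
        state["weights"] += 0.01  # placeholder for a real training step
        state["step"] += 1
        if state["step"] % save_every == 0:
            save_checkpoint(state, path)
    save_checkpoint(state, path)
    return state
```

With this layout, a crash costs at most `save_every` steps of work, and running `train` again picks up from the last saved step.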

This time everything went correctly, but it took a very long time and did little to make up for the time lost. I tested the model a few times to get a good idea of where I should stop training in terms of accuracy on the dataset. You do not want a 100% accurate model, because then it will have just memorized the dataset and will not have any randomness. That is bad because an input is not guaranteed to have previously been in the dataset. After stopping at 41%, 64%, and finally 88% accuracy, I realized that the dataset did not have much of anything about AI. The 21,099 words the model knows were great for making stories and reporting on events, but not so much for writing an informative paragraph about AI.

I was determined to get this done, so I developed an approval system that allowed me to pick, word by word, what would be used while still having every word generated by the AI. For the 35 words in the quote above, I made only 946 rejections. For context, randomly selecting and then rejecting could mean going through as many as 21,099^35 different variations.
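The idea of a word-by-word approval system can be sketched as a simple loop. The version below is an assumption about the general shape, not the system built for this article: `propose` stands in for the model suggesting the next word, `approve` stands in for the human reviewer, and both are replaced here by toy functions so the example runs on its own. The last two lines work through the numbers quoted above.

```python
import random


def generate_with_approval(propose, approve, length):
    """Build a sentence one word at a time, keeping only approved words.

    propose(words) returns a candidate next word given the words so far;
    approve(words, candidate) returns True to keep it. Both are hypothetical
    stand-ins for the model and the human reviewer.
    """
    words = []
    rejections = 0
    while len(words) < length:
        candidate = propose(words)
        if approve(words, candidate):
            words.append(candidate)
        else:
            rejections += 1
    return words, rejections


# Toy stand-ins: a uniform random "model" and a reviewer that only accepts
# words longer than two letters.
rng = random.Random(0)
vocabulary = ["AI", "is", "able", "to", "cause", "people", "trust", "it"]
words, rejections = generate_with_approval(
    propose=lambda so_far: rng.choice(vocabulary),
    approve=lambda so_far, word: len(word) > 2,
    length=5,
)

# Size of the search space quoted above: 35 positions, 21,099 choices each.
search_space = 21099 ** 35
average_rejections_per_word = 946 / 35

print(f"21,099^35 has {len(str(search_space))} digits")
print(f"about {average_rejections_per_word:.0f} rejections per accepted word")
```

Because each word is approved before the next one is proposed, the work grows with the number of rejections per position rather than with the number of whole sentences that could exist.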

This system allowed the mere 15,084,700-parameter AI to seem a little bit closer to something more modern. Most modern artificial intelligences have tens or hundreds of billions of parameters, but reaching that scale requires a good mix of bigger datasets and a larger vocabulary.

For comparison purposes, my artificial intelligence’s dataset size is ~376MB, and it has a vocabulary size of 200 words. A modern AI trained on much more than just one Tesla K40m, such as GPT-J-6B, can easily make those numbers look tiny, with a parameter count of 6,053,381,344, a dataset size of ~825GB, and a vocab size of 50,257 words. That being said, bigger numbers are not always better when you consider model file size. My AI model comes in at ~173MB, while GPT-J-6B measures up to ~24.2GB.
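One way to compare the two models fairly is to divide each file size by its parameter count. The short calculation below uses only the figures given in this article and assumes that MB and GB mean decimal units (10^6 and 10^9 bytes); `bytes_per_parameter` is a helper name made up for this example.

```python
def bytes_per_parameter(file_size_bytes, parameter_count):
    """Average storage used per parameter in a saved model file."""
    return file_size_bytes / parameter_count


MB = 10 ** 6  # assumption: decimal megabytes
GB = 10 ** 9  # assumption: decimal gigabytes

small_model = bytes_per_parameter(173 * MB, 15_084_700)
gpt_j = bytes_per_parameter(24.2 * GB, 6_053_381_344)
size_ratio = (24.2 * GB) / (173 * MB)
parameter_ratio = 6_053_381_344 / 15_084_700

print(f"small model: {small_model:.1f} bytes per parameter")
print(f"GPT-J-6B:    {gpt_j:.1f} bytes per parameter")
print(f"GPT-J-6B has {parameter_ratio:.0f}x the parameters in {size_ratio:.0f}x the file size")
```

By these figures, GPT-J-6B lands close to 4 bytes per parameter, which matches storing each weight as a 32-bit number, while the smaller model's file uses noticeably more space per parameter. The article's figures do not show why. Extra data saved alongside the weights is one possible cause, but that is a guess.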